Below are all of the upcoming 2009 MLA sessions related to new media and the digital humanities. Am I missing something? Let me know in the comments and I’ll add it to the list. You may also be interested in following the Digital Humanities/MLA list on Twitter. (And if you are on Twitter and going to the MLA, let Bethany Nowviskie know, and she’ll add you to the list.)

MONDAY, DECEMBER 28

116. Play the Movie: Computer Games and the Cinematic Turn

8:30–9:45 a.m., 411–412, Philadelphia Marriott

Presiding: Anna Everett, Univ. of California, Santa Barbara; Homay King, Bryn Mawr Coll.

- “The Flaneur and the Space Marine: Temporal Distention in First-Person Shooters,” Jeff Rush, Temple Univ., Philadelphia

- “Viral Play: Internet Humor, Viral Marketing, and the Ubiquitous Gaming of The Dark Knight,” Ethan Tussey, Univ. of California, Santa Barbara

- “Playing the Cut Scene: Agency and Vision in Shadow of the Colossus,” Mark L. Sample, George Mason Univ.

- “Suture and Play: Machinima as Critical Intimacy for Game Studies,” Aubrey Anable, Hamilton Coll.

120. Virtual Worlds and Pedagogy

8:30–9:45 a.m., Liberty Ballroom Salon C, Philadelphia Marriott

Presiding: Gloria B. Clark, Penn State Univ., Harrisburg

- “Rhetorical Peaks,” Matt King, Univ. of Texas, Austin

- “Virtual Theater History: Teaching with Theatron,” Mark Childs, Warwick Univ.; Katherine A. Rowe, Bryn Mawr Coll.

- “Realms of Possibility: Understanding the Role of Multiuser Virtual Environments in Foreign Language Curricula,” Julie M. Sykes, Univ. of New Mexico

- “Information versus Content: Second Life in the Literature Classroom,” Bola C. King, Univ. of California, Santa Barbara

- “Literature Alive,” Beth Ritter-Guth, Hotchkiss School

- “Virtual World Building as Collaborative Knowledge Production: The Online Crystal Palace,” Victoria E. Szabo, Duke Univ.

- “Teaching in Virtual Worlds: Re-Creating The House of Seven Gables in Second Life,” Mary McAleer Balkun, Seton Hall Univ.

- “3-D Interactive Multimodal Literacy and Avatar Chat in a College Writing Class,” Jerome Bump, Univ. of Texas, Austin

For abstracts and possibly video clips, visit www.fabtimes.net/virtpedagog/.

141. Locating the Literary in Digital Media

8:30–9:45 a.m., Liberty Ballroom Salon A, Philadelphia Marriott

- “‘A Breach, [and] an Expansion’: The Humanities and Digital Media,” Dene M. Grigar, Washington State Univ., Vancouver

- “Locating the Literary in New Media: From Key Words and Metatags to Network Recognition and Institutional Accreditation,” Joseph Paul Tabbi, Univ. of Illinois, Chicago

- “Digital, Banal, Residual, Experimental,” Paul Benzon, Rutgers Univ., New Brunswick

- “Genre Discovery: Literature and Shared Data Exploration,” Jeremy Douglass, Univ. of California, San Diego

170. Value Added: The Shape of the E-Journal

10:15–11:30 a.m., Liberty Ballroom Salon C, Philadelphia Marriott

Speakers: Cheryl E. Ball, Kairos; Keith Dorwick, Technoculture; Andrew Fitch and Jon Cotner, Interval(le)s; Kevin Moberly, Technoculture; Julianne Newmark, Xchanges; Eric Dean Rasmussen and Joseph Paul Tabbi, Electronic Book Review

The journals represent a wide range of audiences and technologies. The speakers will display the work that can be done with electronic publications.

For summaries, visit www.ucs.louisiana.edu/~kxd4350/ejournal.

212. Language Theory and New Communications Technologies

12:00 noon–1:15 p.m., Jefferson, Loews

Presiding: David Herman, Ohio State Univ., Columbus

- “Learning around Place: Language Acquisition and Location-Based Technologies,” Armanda Lewis, New York Univ.

- “Constructing the Digital I: Subjectivity in New Media Composing,” Jill Belli, Graduate Center, City Univ. of New York

- “French and Spanish Second-Person Pronoun Use in Computer-Mediated Communication,” Lee B. Abraham, Villanova Univ.; Lawrence Williams, Univ. of North Texas

245. Old Media and Digital Culture

1:45–3:00 p.m., Washington C, Loews

Presiding: Reinaldo Carlos Laddaga, Univ. of Pennsylvania

- “Paper: The Twenty-First-Century Novel,” Jessica Pressman, Yale Univ.

- “First Publish, Then Write,” Craig Epplin, Reed Coll.

- “Digital Literature and the Brazilian Historic Avant-Garde: What Is Old in the New?” Eduardo Ledesma, Harvard Univ.

For abstracts, write to craig.epplin@gmail.com.

254. Web 2.0: What Every Student Knows That You Might Not

1:45–3:00 p.m., Liberty Ballroom Salon C, Philadelphia Marriott

Presiding: Laura C. Mandell, Miami Univ., Oxford

Speakers: Carolyn Guertin, Univ. of Texas, Arlington; Laura C. Mandell; William Aufderheide Thompson, Western Illinois Univ.

For workshop materials, visit www.mla.org/web20.

264. Media Studies and the Digital Scholarly Present

1:45–3:00 p.m., 411–412, Philadelphia Marriott

Presiding: Kathleen Fitzpatrick, Pomona Coll.

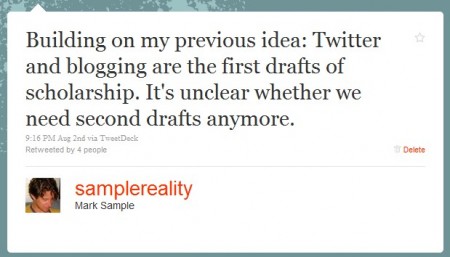

- “Blogging, Scholarship, and the Networked Public Sphere,” Chuck Tryon, Fayetteville State Univ.

- “The Decline of the Author, the Rise of the Janitor,” David Parry, Univ. of Texas, Dallas

- “Remixing Dada Poetry in MySpace: An Electronic Edition of Poetry by the Baroness Elsa von Freytag-Loringhoven in N-Dimensional Space,” Tanya Clement, Univ. of Maryland, College Park

- “Right Now: Media Studies Scholarship and the Quantitative Turn,” Jeremy Douglass, Univ. of California, San Diego

For abstracts, links, and related material, visit http://mediacommons.futureofthebook.org/mla2009 after 1 Dec.

265. Getting Funded in the Humanities: An NEH Workshop

1:45–3:45 p.m., Liberty Ballroom Salon A, Philadelphia Marriott

Presiding: John David Cox, National Endowment for the Humanities; Jason C. Rhody, National Endowment for the Humanities

This workshop will highlight recent awards and outline current funding opportunities. In addition to emphasizing grant programs that support individual and collaborative research and education, this workshop will include information on new developments such as the NEH’s Office of Digital Humanities. A question-and-answer period will follow.

268. Lives in New Media

3:30–4:45 p.m., 305–306, Philadelphia Marriott

Presiding: William Craig Howes, Univ. of Hawai‘i, Mānoa

- “Blogging the Pain: Disease and Grief on the Internet,” Bärbel Höttges, Univ. of Mainz

- “New Media and the Creation of Autistic Identities,” Ann Jurecic, Rutgers Univ., New Brunswick

- “‘25 Random Things about Me’: Facebook and the Art of the Autobiographical List,” Theresa A. Kulbaga, Miami Univ., Hamilton

322. Looking for Whitman: A Cross-Campus Experiment in Digital Pedagogy

7:15–8:30 p.m., 410, Philadelphia Marriott

Presiding: Matthew K. Gold, New York City Coll. of Tech., City Univ. of New York

Speakers: D. Brady Earnhart, Univ. of Mary Washington; Matthew K. Gold; James Groom, Univ. of Mary Washington; Tyler Brent Hoffman, Rutgers Univ., Camden; Karen Karbiener, New York Univ.; Mara Noelle Scanlon, Univ. of Mary Washington; Carol J. Singley, Rutgers Univ., Camden

Visit the project Web site, http://lookingforwhitman.org.

338. Beyond the Author Principle

7:15–8:30 p.m., Liberty Ballroom Salon C, Philadelphia Marriott

Presiding: Bruce R. Smith, Univ. of Southern California

- “English Broadside Ballad Archive: A Digital Home for the Homeless Broadside Ballad,” Patricia Fumerton, Univ. of California, Santa Barbara; Carl Stahmer, Univ. of Maryland, College Park

- “The Total (Digital) Archive: Collecting Knowledge in Online Environments,” Katherine D. Harris, San José State Univ.

- “Displacing ‘Shakespeare’ in the World Shakespeare Encyclopedia,” Katherine A. Rowe, Bryn Mawr Coll.

TUESDAY, DECEMBER 29

361. Making Research: Limits and Barriers in the Age of Digital Reproduction

8:30–9:45 a.m., 411–412, Philadelphia Marriott

Presiding: Robin G. Schulze, Penn State Univ., University Park

- “The History and Limitations of Digitalization,” William Baker, Northern Illinois Univ.

- “Moving Past the Hype of Hypertext: Limits of Scholarly Digital Ventures,” Elizabeth Vincelette, Old Dominion Univ.

- “Transforming the Study of Australian Literature through a Collaborative eResearch Environment,” Kerry Kilner, Univ. of Queensland

- “A Proposed Model for Peer Review of Online Publications,” Jan Pridmore, Boston Univ.

413. Has Comp Moved Away from the Humanities? What’s Lost? What’s Gained?

10:15–11:30 a.m., 411–412, Philadelphia Marriott

Presiding: Krista L. Ratcliffe, Marquette Univ.

- “Turning Composition toward Sovereignty,” John L. Schilb, Indiana Univ., Bloomington

- “Composition and the Preservation of Rhetorical Traditions in a Global Context,” Arabella Lyon, Univ. at Buffalo, State Univ. of New York

- “What Composition Can Learn from the Digital Humanities,” Olin Bjork, Georgia Inst. of Tech.; John Pedro Schwartz, American Univ. of Beirut

For abstracts, visit www.marquette.edu/english/ratcliffe.shtml.

420. Digital Scholarship and African American Traditions

10:15–11:30 a.m., 307, Philadelphia Marriott

Speaker: Anna Everett, Univ. of California, Santa Barbara

For abstracts, visit www.ach.org/mla/mla09/ after 1 Dec.

490. Links and Kinks in the Chain: Collaboration in the Digital Humanities

1:45–3:00 p.m., 410, Philadelphia Marriott

Presiding: Tanya Clement, Univ. of Maryland, College Park

Speakers: Jason B. Jones, Central Connecticut State Univ.; Laura C. Mandell, Miami Univ., Oxford; Bethany Nowviskie, Univ. of Virginia; Timothy B. Powell, Univ. of Pennsylvania; Jason C. Rhody, National Endowment for the Humanities

For abstracts, visit http://lenz.unl.edu/mla09 after 1 Dec.

512. Journal Ranking, Reviewing, and Promotion in the Age of New Media

3:30–4:45 p.m., Liberty Ballroom Salon C, Philadelphia Marriott

Presiding: Meta DuEwa Jones, Univ. of Texas, Austin

Speakers: Daniel Brewer, L’Esprit Créateur; Mária Minich Brewer, L’Esprit Créateur; Martha J. Cutter, MELUS; Mike King, New York Review of Books; Joycelyn K. Moody, African American Review; Bonnie Wheeler, Council of Editors of Learned Journals

560. (Re)Framing Transmedial Narratives

7:15–8:30 p.m., Congress A, Loews

Presiding: Marc Ruppel, Univ. of Maryland, College Park

- “From Narrative, Game, and Media Studies to Transmodiology,” Christy Dena, Univ. of Sydney

- “To See a Universe in the Spaces In Between: Migratory Cues and New Narrative Ontologies,” Marc Ruppel

- “Works as Sites of Struggle: Negotiating Narrative in Cross-Media Artifacts,” Burcu S. Bakioglu, Indiana Univ., Bloomington

For abstracts, visit www.glue.umd.edu/~mruppel/Ruppel_MLA2009_SpecialPanelAbstracts.docx.

575. Gaining a Public Voice: Alternative Genres of Publication for Graduate Students

7:15–8:30 p.m., 405, Philadelphia Marriott

Presiding: Jens Kugele, Georgetown Univ.

- “Animating Audiences: Digital Publication Projects and Their Publics,” Jentery Sayers, Univ. of Washington, Seattle

- “Blogging Beowulf,” Mary Kate Hurley, Columbia Univ.

- “Hope Is Not a Husk but Persists in and as Us: A Proposal for Graduate Collaborative Publication,” Emily Carr, Univ. of Calgary

- “The Alternative as Mainstream: Building Bridges,” Katherine Marie Arens, Univ. of Texas, Austin

WEDNESDAY, DECEMBER 30

625. Making Research: Collaboration and Change in the Age of Digital Reproduction

8:30–9:45 a.m., Grand Ballroom Salon L, Philadelphia Marriott

Presiding: Maura Carey Ives, Texas A&M Univ., College Station

- “What Is Digital Scholarship? The Example of NINES,” Andrew M. Stauffer, Univ. of Virginia

- “Critical Text Mining; or, Reading Differently,” Matthew Wilkens, Rice Univ.

- “‘The Apex of Hipster XML GeekDOM’: Using a TEI-Encoded Dylan to Help Understand the Scope of an Evolving Community in Digital Literary Studies,” Lynne Siemens, Univ. of Victoria; Raymond G. Siemens, Univ. of Victoria

632. Quotation, Sampling, and Appropriation in Audiovisual Production

8:30–9:45 a.m., 406, Philadelphia Marriott

Presiding: Nora M. Alter, Univ. of Florida; Paul D. Young, Vanderbilt Univ.

- “‘We the People’: Imagining Communities in Dave Chappelle’s Block Party,” Badi Sahar Ahad, Loyola Univ., Chicago

- “Pinning Down the Pinup: The Revival of Vintage Sexuality in Film, Television, and New Media,” Mabel Rosenheck, Univ. of Texas, Austin

- “Playful Quotations,” Lin Zou, Indiana Univ., Bloomington

- “For the Record: The DJ Is a Critic, ‘Constructing a Sort of Argument,’” Mark McCutcheon, Athabasca Univ.

643. New Models of Authorship

8:30–9:45 a.m., Grand Ballroom Salon K, Philadelphia Marriott

Presiding: Carolyn Guertin, Univ. of Texas, Arlington

- “Authors for Hire: Branded Entertainment’s Challenges to Legal Doctrine and Literary Theory,” Zahr Said Stauffer, Univ. of Virginia

- “The Digital Archive in Motion: Data Mining as Authorship,” Paul Benzon, Temple Univ., Philadelphia

- “Scandalous Searches: Rhizomatic Authorship in America’s Online Unintentional Narratives,” Andrew Ferguson, Univ. of Tulsa

For abstracts, visit https://mavspace.uta.edu/guertin/mla-models-of-authorship.html.

655. Today’s Students, Today’s Teachers: Technology

10:15–11:30 a.m., 410, Philadelphia Marriott

Presiding: Christine Henseler, Union Coll., NY

- “Ning: Teaching Writing to the Net Generation,” Nathalie Ettzevoglou, Univ. of Connecticut, Storrs; Jessica McBride, Univ. of Connecticut, Storrs

- “Online Tutoring from the Ground Up,” William L. Magrino, Jr., Indiana Univ. of Pennsylvania; Peter B. Sorrell, Rutgers Univ., New Brunswick

- “Using Facebook for Online Discussion in the Literature Classroom,” Emily Meyers, Univ. of Oregon

676. The Impact of Obama’s Rhetorical Strategies

12:00 noon–1:15 p.m., Grand Ballroom Salon K, Philadelphia Marriott

Presiding: Linda Adler-Kassner, Eastern Michigan Univ.

- “Keeping Pace with Obama’s Rhetoric: Digital Ecologies in the Writing Program and the White House,” Shawn Casey, Ohio State Univ., Columbus

- “Classroom 2.0 Connecting with the Digital Generation: Pedagogical Applications of Barack Obama’s Rhetorical Use of Twitter,” Jeff Swift, Brigham Young Univ., UT

- “Obama Online: Using the White House as an Exemplar for Writing Instruction,” Elizabeth Mathews Losh, Univ. of California, Irvine

- “Made Not Only in Words: The Politics and Rhetoric of Barack Obama’s New Media Presidency as a Moment for Uniting Civic Rhetoric and Civic Engagement,” Michael X. Delli Carpini, Univ. of Pennsylvania; Dominic DelliCarpini, York Coll. of Pennsylvania

Respondent: Linda Adler-Kassner

703. Teaching Literature by Integrating Technology

12:00 noon–1:15 p.m., Commonwealth Hall A1, Loews

Presiding: Peter Höyng, Emory Univ.

- “Tatort Technology: Teaching German Crime Novels,” Christina Frei, Univ. of Pennsylvania

- “Old Meets New: Teaching Fairy Tales by Using Technology,” Angelika N. Kraemer, Michigan State Univ.

- “The Role of E-Learning in Excellence Initiatives: Ideal Scenarios and Practical Limitations,” David James Prickett, Humboldt-Universität

Respondent: Caroline Schaumann, Emory Univ.

706. Digital Africana Studies: Creating Community and Bridging the Gap between Africana Studies and Other Disciplines

12:00 noon–1:15 p.m., Adams, Loews

Presiding: Zita Nunes, Univ. of Maryland, College Park

Speakers: Kalia Brooks, Inst. for Doctoral Studies in the Visual Arts; Bryan Carter, Univ. of Central Missouri; Kara Keeling, Univ. of Southern California

For abstracts, visit www.ach.org/mla/mla09/ after 1 Dec.

710. Frontiers in Business Writing Pedagogy: New Media and Literature Strategies

12:00 noon–1:15 p.m., 308, Philadelphia Marriott

Presiding: James K. Archibald, McGill Univ.

- “New Media and Business Writing,” Harold Henry Hellwig, Idaho State Univ.

- “Bringing Second Life to Business Writing Pedagogy,” R. Dirk Remley, Kent State Univ., Kent

- “The Literature of Business: An Approach to Teaching Literature-Based Writing-Intensive Courses,” Scott J. Warnock, Drexel Univ.

Respondent: Mahli Xuan Mechenbier, Kent State Univ., Kent

For abstracts, write to kwills@iupuc.edu.

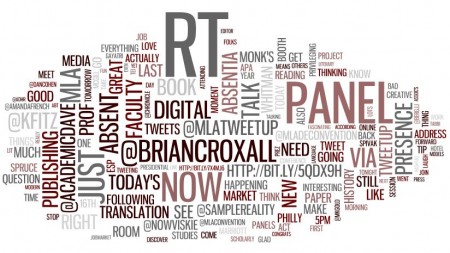

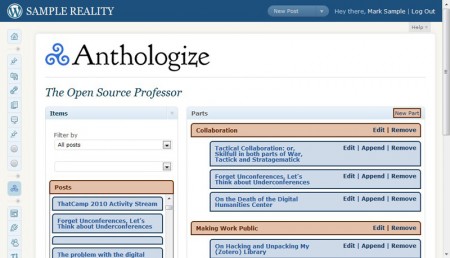

[I was on a panel called “The Open Professoriat(e)” at the 2011 MLA Convention in Los Angeles, in which we focused on the dynamic between academia, social media, and the public. My talk was an abbreviated version of a post that appeared on samplereality in July. Here is the text of the talk as I delivered it at the MLA, interspersed with still images from my presentation. The original slideshow is at the end of this post. Co-panelists Amanda French and Erin Templeton have also posted their talks online.]

While Strategy comes from the Greek στρατηγός, meaning commander or general. A general is supposed to be a big-picture kind of guy, so I guess that makes sense. And I suppose the arrangement of individual elements comes close to the modern-day meaning of a military tactic.

Aside from the free chips I got for checking into a
